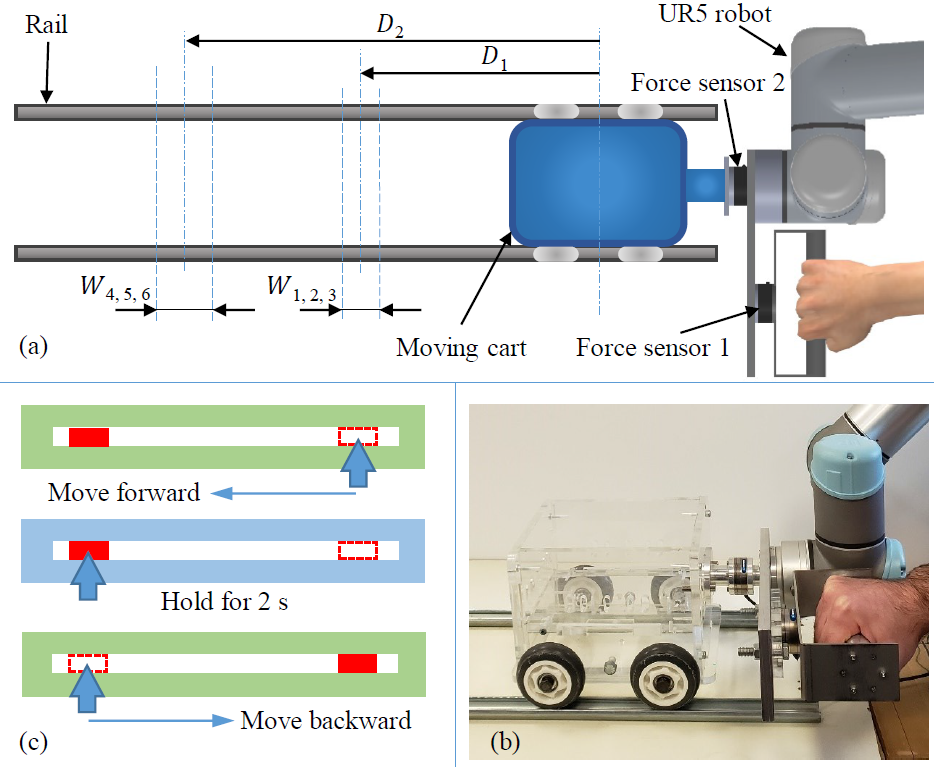

A Computational Multicriteria Optimization Approach to Controller Design for Physical Human-Robot Interaction

Physical human-robot interaction (pHRI) combines the strengths of a human operator and a collaborative robot in tasks involving physical interaction, with the aim of improving task performance. However, designing interaction controllers that achieve both safe and transparent operation is challenging, mainly because these objectives conflict. Since perfect transparency is practically unachievable, controllers that offer a better compromise between these objectives are desirable. In this study, we propose a Pareto optimization framework that allows the designer to make informed decisions by thoroughly studying the tradeoff between stability robustness and transparency.
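As a rough illustration of what studying such a tradeoff involves, the sketch below filters a set of candidate controllers down to its Pareto front. The candidate labels, the scores, and the convention that both objectives are maximized are assumptions made for this example; they are not taken from the study.

```python
from typing import List, Tuple

# Hypothetical candidate: (label, stability_robustness, transparency).
# Assumption: both scores are "higher is better".
Candidate = Tuple[str, float, float]


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is at least as good as b on both objectives and strictly better on one."""
    return a[1] >= b[1] and a[2] >= b[2] and (a[1] > b[1] or a[2] > b[2])


def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Keep only the candidates that no other candidate dominates."""
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


# Made-up scores, for illustration only.
candidates = [
    ("A", 0.9, 0.2),
    ("B", 0.7, 0.5),
    ("C", 0.6, 0.4),  # dominated by B
    ("D", 0.3, 0.9),
    ("E", 0.3, 0.6),  # dominated by D
]

front = pareto_front(candidates)
front_labels = [c[0] for c in front]
print(front_labels)
```

The designer would then choose among the remaining non-dominated candidates according to how much transparency they are willing to trade for robustness.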

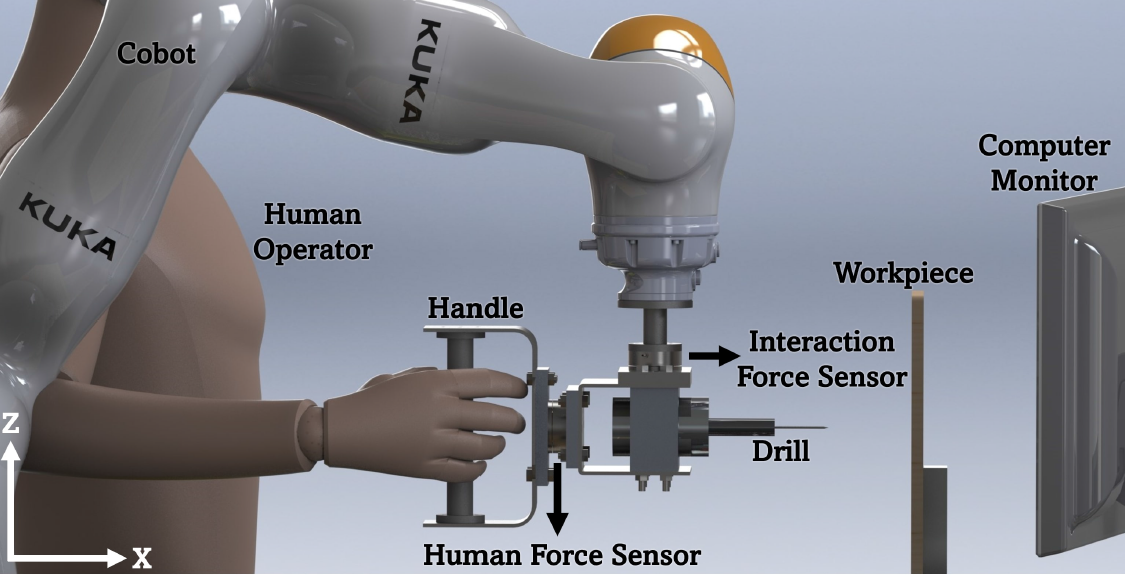

An Adaptive Admittance Controller for Collaborative Drilling with a Robot Based on Subtask Classification via Deep Learning

We have developed a supervised learning approach based on an Artificial Neural Network (ANN) model for real-time classification of subtasks in a physical human-robot interaction (pHRI) task involving contact with a stiff environment. We consider three subtasks for a given pHRI task: Idle, Driving, and Contact. Based on this classification, the parameters of an admittance controller that regulates the interaction between the human and the robot are adjusted adaptively in real time to make the robot more transparent to the operator (i.e., less resistant) during the Driving phase and more stable during the Contact phase. The Idle phase is primarily used to detect the initiation of the task.
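The idea of switching admittance parameters by subtask can be sketched as follows. This is a minimal example, assuming a first-order admittance model (virtual mass and damping) integrated with forward Euler. The parameter values are hypothetical, and the subtask label is passed in directly rather than produced by the ANN classifier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdmittanceParams:
    mass: float     # virtual mass [kg]
    damping: float  # virtual damping [N*s/m]


# Hypothetical values: low damping while Driving (more transparent),
# high damping in Contact (more stable). Not taken from the study.
PARAMS = {
    "Idle": AdmittanceParams(mass=10.0, damping=200.0),
    "Driving": AdmittanceParams(mass=5.0, damping=20.0),
    "Contact": AdmittanceParams(mass=10.0, damping=200.0),
}


def admittance_step(velocity: float, force: float, subtask: str, dt: float = 0.001) -> float:
    """One forward-Euler step of m * dv/dt + b * v = F for the given subtask."""
    p = PARAMS[subtask]
    accel = (force - p.damping * velocity) / p.mass
    return velocity + dt * accel


def steady_velocity(force: float, subtask: str, steps: int = 20000) -> float:
    """Simulate from rest under a constant force and return the final velocity."""
    v = 0.0
    for _ in range(steps):
        v = admittance_step(v, force, subtask)
    return v


v_driving = steady_velocity(10.0, "Driving")
v_contact = steady_velocity(10.0, "Contact")
print(v_driving, v_contact)
```

Under the same 10 N push, the commanded velocity settles near F/b in each case, so the Driving parameters yield a much faster response than the Contact parameters. A real controller would also need to handle the transition between parameter sets, which this sketch does not address.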

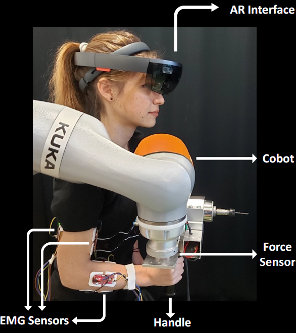

Detecting Human Motion Intention during pHRI Using Artificial Neural Networks Trained by EMG Signals

Collaboration between a human and a cobot can be enhanced by detecting the human's intentions, making production in future factories more flexible and effective. Interpreting human intention and then adjusting the cobot's controller accordingly to assist the human is therefore a core challenge in physical human-robot interaction (pHRI). In this study, we propose a classifier based on Artificial Neural Networks (ANN) that predicts the intended direction of human movement from electromyography (EMG) signals acquired from human arm muscles. We employ this classifier in an admittance control architecture to constrain human arm motion to the intended direction and prevent undesired movements along other directions.

Adaptive Human Force Scaling via Admittance Control for Physical Human-Robot Interaction

The goal of this study is to design an admittance controller that lets a robot adaptively change its contribution to a collaborative manipulation task executed with a human partner in order to improve task performance. This is achieved by adaptively scaling the human force based on the human's movement intention while accounting for the requirements of different task phases. In our approach, the human's movement intentions are estimated from the measured human force and the velocity of the manipulated object, and converted to a quantitative value using a fuzzy logic scheme. This value is then used as a variable gain in an admittance controller to adaptively adjust the robot's contribution to the task without changing the admittance time constant.
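One way to see why a gain on the human force can change the robot's contribution while leaving the time constant untouched is to assume a first-order admittance model. The model form and the symbols used here (virtual mass $m$, virtual damping $b$, scaling gain $\alpha$, human force $F_h$, velocity $v$) are assumptions for illustration; the summary does not state them.

```latex
m\,\dot{v}(t) + b\,v(t) = \alpha\,F_h(t)
\quad\Longrightarrow\quad
\frac{V(s)}{F_h(s)} = \frac{\alpha}{m s + b} = \frac{\alpha/b}{\tau s + 1},
\qquad \tau = \frac{m}{b}
```

Under this assumption, $\alpha$ only scales the steady-state gain $\alpha/b$, while $\tau = m/b$ does not depend on $\alpha$.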

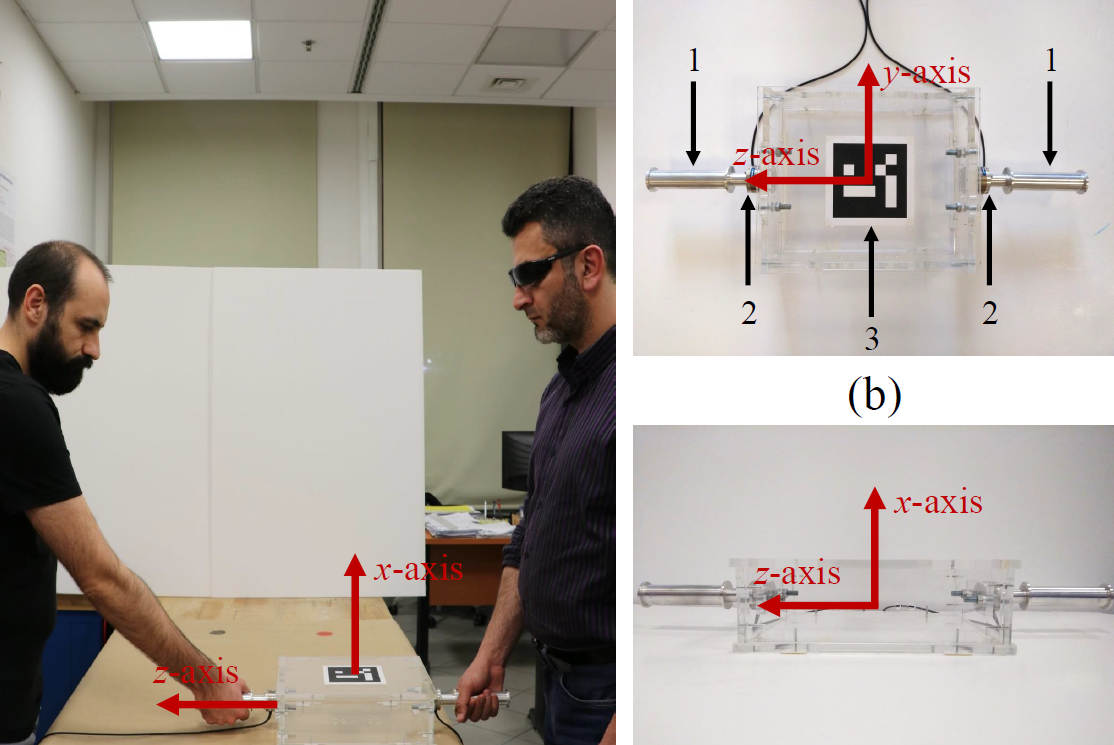

A Novel Haptic Feature Set for the Classification of Interactive Motor Behaviors in Collaborative Object Transfer

This study explores the value of haptic data for extracting information about the underlying interaction patterns in physical human-human interaction (pHHI). We study a joint object transportation scenario involving two human partners and show that haptic features based on force/torque information suffice to identify human interactive behavior patterns. We categorize the interaction into four discrete behavior classes. These classes describe whether the partners work in harmony or face conflicts while jointly transporting an object through translational or rotational movements.