A Novel Haptic Feature Set for the Classification of Interactive Motor Behaviors in Collaborative Object Transfer

Koc University Human-Human Interaction Behaviour Pattern Recognition Dataset

This repository contains raw and labelled haptic interaction data collected from human-human dyads in a joint object manipulation scenario.

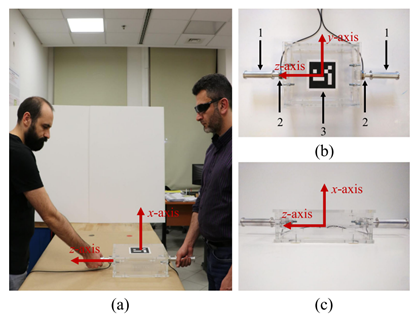

In our study*, we report evidence that harmonious and conflicting behavior patterns in physical human-human interaction (pHHI) can be recognized from haptic information alone, without tracking the manipulated object. To this end, we designed an experimental study in which two human partners physically interact with each other to manipulate an object (see Fig. 1).

*Al-Saadi, Z., Sirintuna, D., Kucukyilmaz, A., Basdogan, C., 2021, “A Novel Haptic Feature Set for the Classification of Interactive Motor Behaviors in Collaborative Object Transfer”, IEEE Transactions on Haptics, DOI: 10.1109/TOH.2020.3034244

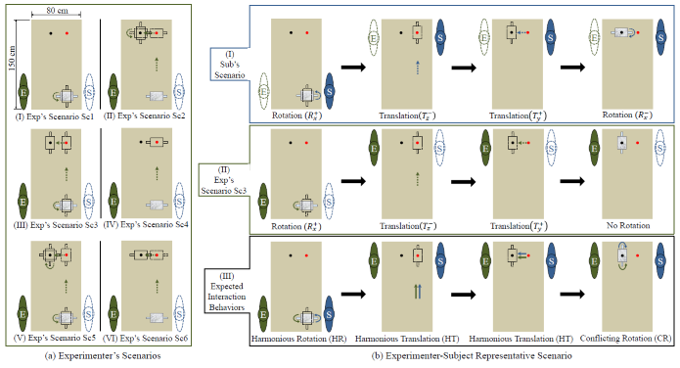

The experimenter, on the other hand, was given different target configurations that define six scenarios (Sc1-Sc6). Figure 2 shows the six scenarios followed by the experimenter, along with a representative experimenter-subject scenario (Sc3) and its expected behaviors. Each scenario is designed to isolate more than one of the following four interaction patterns:

- Harmonious Translation (HT)

- Conflicting Translation (CT)

- Harmonious Rotation (HR)

- Conflicting Rotation (CR)
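The four patterns combine two independent attributes: the type of motion (translation or rotation) and whether the partners act in agreement (harmonious) or against each other (conflicting). The Python sketch below encodes that decomposition as an enum. The abbreviations come from the list above, but the `Pattern` class, its attributes, and `from_attributes` are hypothetical helpers for illustration, not the label format used in the dataset files.

```python
from enum import Enum


class Pattern(Enum):
    """Interaction patterns from the README, split into (motion, harmonious).

    Hypothetical encoding for illustration; the dataset's own label
    format is not described in this README.
    """

    HT = ("Harmonious Translation", "translation", True)
    CT = ("Conflicting Translation", "translation", False)
    HR = ("Harmonious Rotation", "rotation", True)
    CR = ("Conflicting Rotation", "rotation", False)

    def __init__(self, full_name: str, motion: str, harmonious: bool) -> None:
        # Enum unpacks each tuple value into these arguments.
        self.full_name = full_name
        self.motion = motion
        self.harmonious = harmonious


def from_attributes(motion: str, harmonious: bool) -> Pattern:
    """Return the pattern for a motion type and an agreement flag."""
    for pattern in Pattern:
        if pattern.motion == motion and pattern.harmonious == harmonious:
            return pattern
    raise ValueError(f"unknown motion type: {motion!r}")


if __name__ == "__main__":
    print(from_attributes("rotation", False).name)  # CR
    print(Pattern.HT.full_name)  # Harmonious Translation
```

Splitting the labels this way makes it easy to evaluate the two sub-problems (translation vs. rotation, harmonious vs. conflicting) separately if that is useful for a given analysis.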

Downloads

We conducted a study with 12 subjects (8 males and 4 females; mean age 28.4 ± 7.0 years, SD). Each dyad (the experimenter paired with one subject) performed 12 trials, executing each of the six scenarios Sc1-Sc6 twice. The data were recorded at 1 kHz.
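These figures fix the size and time base of the recordings: one experimenter paired with each of the 12 subjects gives 12 dyads, each performing 6 scenarios × 2 repetitions = 12 trials, for 144 trials in total, and at 1 kHz sample k of a trial lies at t = k / 1000 s. A minimal Python sketch of that bookkeeping follows. The constant and function names are our own, and it assumes each trial is stored as a sequence of samples starting at t = 0.

```python
# Study dimensions as stated in the README.
NUM_DYADS = 12           # one experimenter paired with each of 12 subjects
NUM_SCENARIOS = 6        # Sc1-Sc6
REPETITIONS = 2          # each scenario executed twice per dyad
SAMPLING_RATE_HZ = 1000  # data recorded at 1 kHz


def trials_per_dyad() -> int:
    return NUM_SCENARIOS * REPETITIONS


def total_trials() -> int:
    return NUM_DYADS * trials_per_dyad()


def sample_time(k: int, fs: float = SAMPLING_RATE_HZ) -> float:
    """Time in seconds of sample k, assuming the trial starts at t = 0."""
    return k / fs


def samples_between(t_start: float, t_end: float,
                    fs: float = SAMPLING_RATE_HZ) -> range:
    """Sample indices from t_start up to (not including) t_end.

    Times are rounded to the nearest sample; assumes t = 0 at sample 0.
    """
    return range(round(t_start * fs), round(t_end * fs))


if __name__ == "__main__":
    print(trials_per_dyad(), total_trials())  # 12 144
    print(sample_time(2500))                  # 2.5
    print(len(samples_between(1.0, 1.5)))     # 500
```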

Raw Interaction Data

The feature sets are stored as MATLAB (.mat) files, each containing 30 columns of haptic-based features.
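One possible way to read these files in Python is `scipy.io.loadmat`, which returns a dictionary mapping MATLAB variable names to NumPy arrays. The README does not name the variable that holds the features or state whether rows are samples, so the hypothetical `load_feature_matrix` helper below assumes an (n_samples × 30) layout and, unless a variable name is given, picks the first 2-D numeric array with 30 columns. Note that `loadmat` cannot read MATLAB v7.3 (HDF5-based) files; those would need an HDF5 reader instead.

```python
import numpy as np
import scipy.io

EXPECTED_FEATURES = 30  # the README states 30 feature columns per file


def load_feature_matrix(path, variable=None):
    """Return a float array of shape (n_samples, 30) from a .mat file.

    Hypothetical helper: assumes rows are samples and columns are the
    30 haptic features. `path` may be a filename or a binary file object.
    """
    contents = scipy.io.loadmat(path)
    # loadmat adds metadata entries such as '__header__'; skip them.
    variables = {k: v for k, v in contents.items() if not k.startswith("__")}
    if variable is not None:
        candidates = [variables[variable]]
    else:
        candidates = list(variables.values())
    for arr in candidates:
        arr = np.asarray(arr)
        if (
            arr.ndim == 2
            and arr.shape[1] == EXPECTED_FEATURES
            and np.issubdtype(arr.dtype, np.number)
        ):
            return arr.astype(float)
    raise ValueError(
        f"no 2-D numeric array with {EXPECTED_FEATURES} columns found"
    )
```

For example, `features = load_feature_matrix("subject01_features.mat")` would return the feature matrix of one file; the filename here is made up, so substitute the actual names from the download.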

Based on the collected data, you can animate the experiment using the following dataset and animation code: