Koc University Human-Human Interaction Behaviour Pattern Recognition Dataset

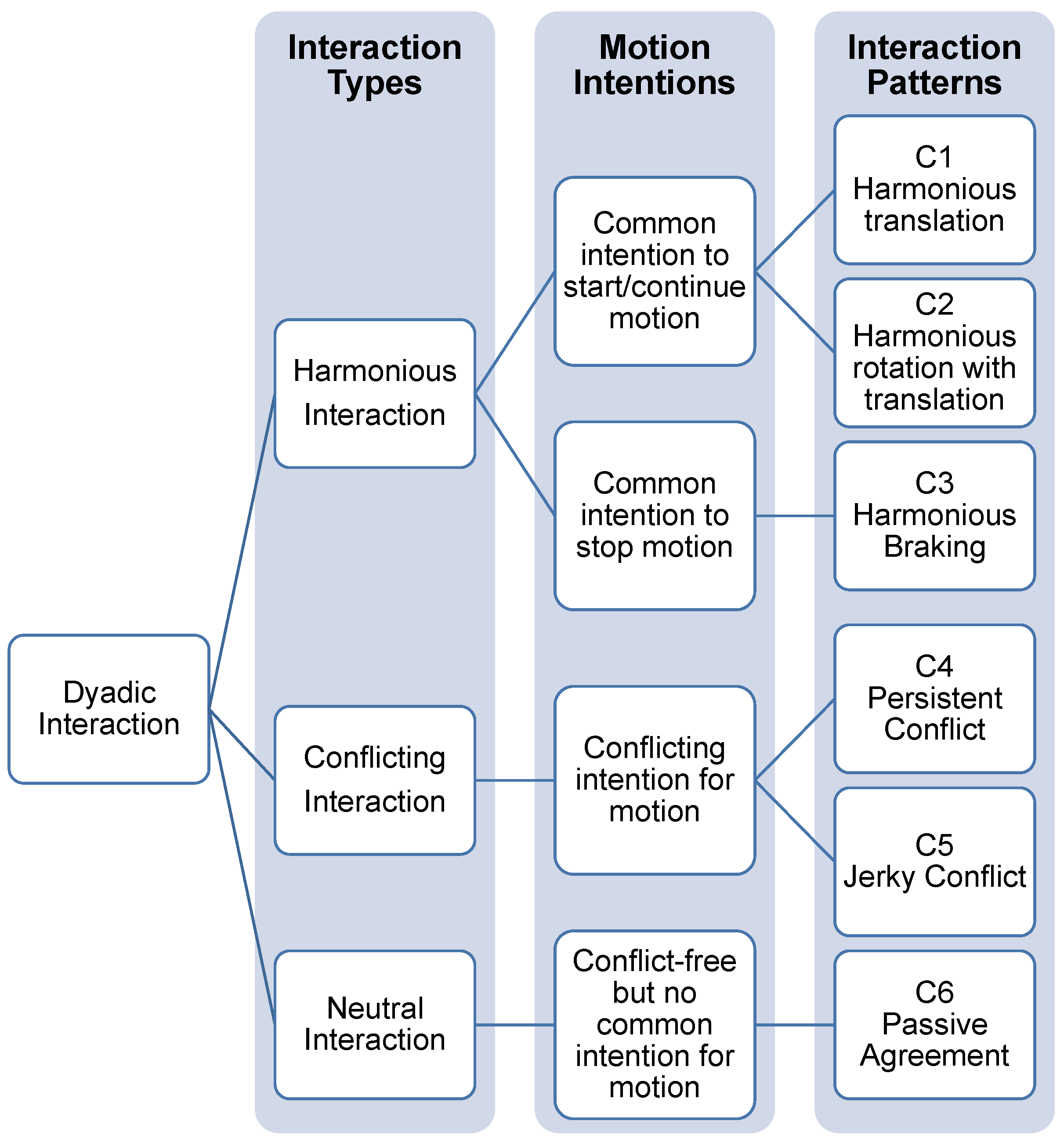

This repository contains labelled haptic interaction data collected from human-human dyads in a joint object manipulation scenario. We conducted an experimental study to generate data that can be used to identify human-human haptic interaction patterns and to learn models that capture the salient characteristics of dyadic interactions.

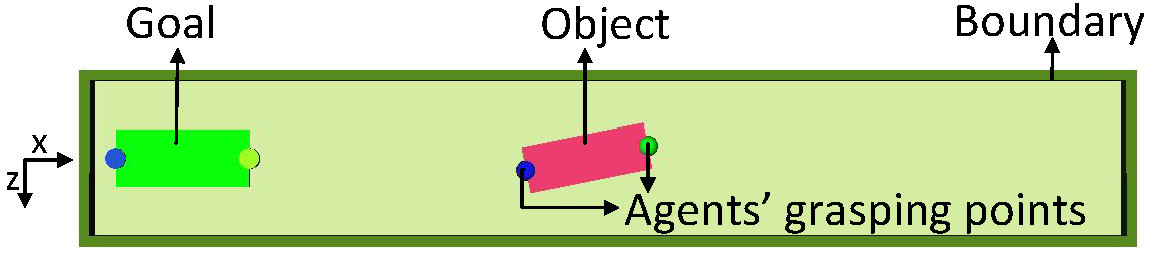

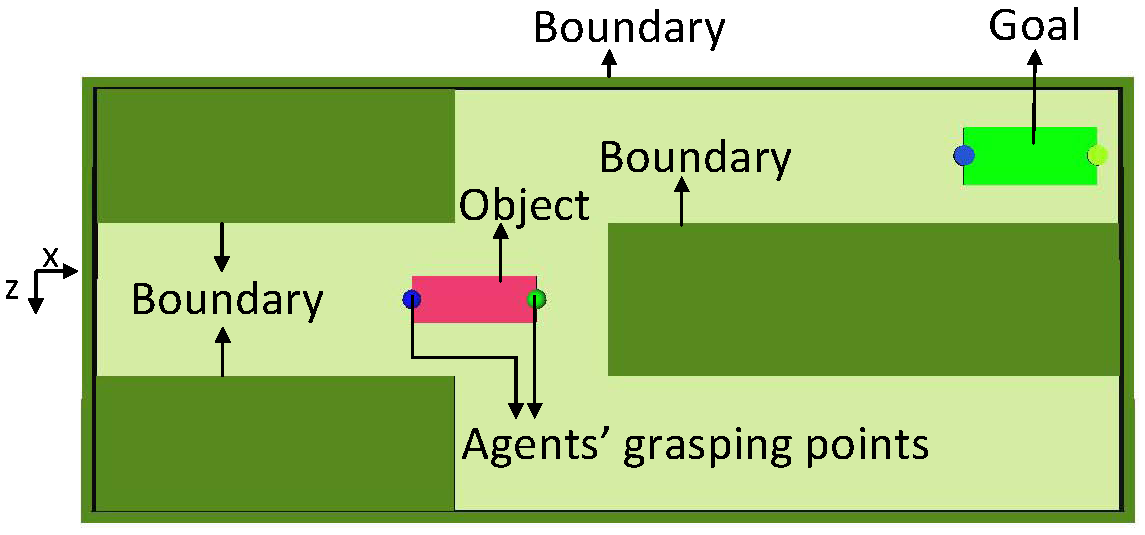

To identify human interaction patterns, we developed an application in which two human subjects interact in a virtual environment through the haptic channel using SensAble Phantom Premium haptic devices. The application requires the subjects to coordinate their actions in order to move a rectangular object together in a 2D maze-like environment.


Downloads

Forty subjects (6 female and 34 male), aged between 21 and 29, participated in our study. The subjects were randomly divided into two groups to form dyads whose members worked as partners during the experiment. Hence, this dataset consists of data collected from 20 human-human dyads. For each dyad, the data was recorded at 1 kHz and is stored as a Matlab struct.
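Since the recordings are sampled at 1 kHz, consecutive samples are 1 ms apart. The following minimal Python sketch shows how sample indices could be mapped to timestamps. The function names are hypothetical and not part of the dataset, and the sketch assumes the first sample of a trial is taken as t = 0.

```python
SAMPLE_RATE_HZ = 1000  # 1 kHz, as stated in the dataset description


def sample_times(n_samples, sample_rate_hz=SAMPLE_RATE_HZ):
    """Return the time in seconds of each sample, with the first sample at t = 0 (assumption)."""
    return [i / sample_rate_hz for i in range(n_samples)]


def duration_seconds(n_samples, sample_rate_hz=SAMPLE_RATE_HZ):
    """Return the time span covered by n_samples consecutive samples."""
    return n_samples / sample_rate_hz


if __name__ == "__main__":
    # A hypothetical trial with 2500 samples covers 2.5 seconds.
    print(duration_seconds(2500))
```

Note that Matlab indexes arrays from 1, so sample `k` in Matlab corresponds to index `k - 1` in this sketch.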

Raw Interaction Data

Because of their large size, the videos are not available online. However, you can reconstruct the simulated trials by running this Matlab code.

The feature sets are stored as Matlab .mat files, each of which is a 6×1 cell array. Cells 1 to 6 respectively contain instances from classes C1 to C6 as depicted in Fig. 2 above.
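The cell-per-class layout described above can be turned into a flat list of labelled instances, as in the minimal Python sketch below. It assumes the 6×1 cell array has already been loaded into a Python sequence of six collections (for example with a .mat reader such as `scipy.io.loadmat`), and that each element of a cell is one instance's feature vector. The function name `flatten_classes` and the toy data are hypothetical, and the internal layout of each cell should be checked against the actual files.

```python
def flatten_classes(cells):
    """Flatten six per-class collections into parallel feature and label lists.

    cells[0] is assumed to hold the instances of class C1, cells[1] those of C2,
    and so on up to cells[5] for C6.
    """
    if len(cells) != 6:
        raise ValueError("expected exactly 6 cells, one per class C1..C6")
    features, labels = [], []
    for class_index, instances in enumerate(cells, start=1):
        for instance in instances:
            features.append(list(instance))
            labels.append(f"C{class_index}")
    return features, labels


if __name__ == "__main__":
    # Toy stand-in for a loaded feature set: two instances in C1, one in C3.
    toy_cells = [[[0.1, 0.2], [0.3, 0.4]], [], [[0.5, 0.6]], [], [], []]
    X, y = flatten_classes(toy_cells)
    print(len(X), y)
```

Keeping the labels as strings `"C1"` to `"C6"` mirrors the class names used on this page. Mapping them to integers is straightforward if a classifier requires it.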

Cigil E. Madan, Ayse Kucukyilmaz, T. Metin Sezgin, and Cagatay Basdogan. Recognition of Haptic Interaction Patterns in Dyadic Joint Object Manipulation. IEEE Transactions on Haptics, vol. 8, no. 1, pp. 54-66, Jan.-Mar. 2015. doi: 10.1109/TOH.2014.2384049

The experimental procedure and the details of how the data was collected can be found in the aforementioned paper. Should you have any queries, please direct them to Ayse Kucukyilmaz via e-mail: a.kucukyilmaz [at] imperial.ac.uk

The dataset was collected at Koc University (Robotics and Mechatronics Laboratory and Intelligent User Interfaces Laboratory). The copyright of the data remains with this institution.